What is the Metaverse?

What does the term "Metaverse" mean to us?

A future space that goes beyond mimicking the real world to help people achieve things beyond the laws of physics.

A system that combines multiple senses and interactions to provide a more intuitive, immersive and productive experience.

Why is XR the potential access point?

As XR attracts more attention, I noticed that current XR interactions rely on gestures and behaviors carried over from existing interfaces, and that overall they lack consistency.

However, XR has the potential to go beyond these traditional methods and create a more natural and universal VR interaction system.

What are the current barriers in XR interaction?

Our usability study showed that current XR interaction falls short in several ways. I summarized the findings as follows.

High learning costs

Learning costs are high because natural, intuitive gestures are lacking.

Limited interaction

Interactions in VR are limited by the traditional ways we interact with machines.

In VR, interactions differ from one interface or app to the next. People struggle to remember them all and have to change their habits for each app.

We want to explore the possibilities of gestural interfaces to make them more usable, intuitive, and efficient.

Target user

I researched current hardcore VR/AR users and designed a persona based on them.

Gestures should be easy to trigger and should be ergonomically designed.

Drawing on Don Norman's article "Gestural Interfaces: A Step Backward In Usability," I set up design principles and information channels to help us brainstorm the interactions.

Select

Rotate

Move

Delete

Drag in space

Multi-select

Multi-selected

Group/Ungroup

Slicer

Selection

Scan

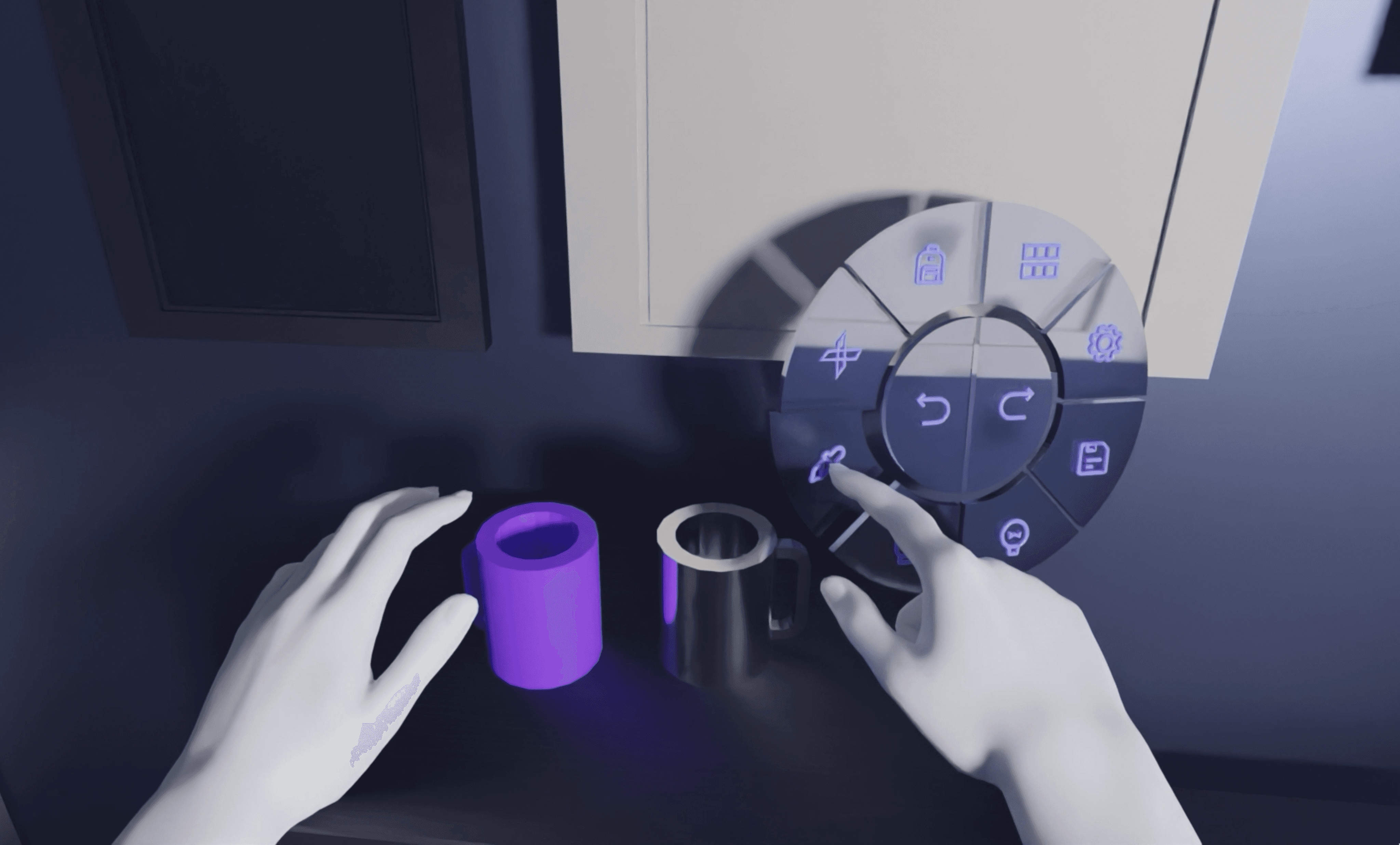

Menu

Picker/Eyedropper

Storage

Natural Gestures

Gestures for editing

Gestures for virtual tools

I used these three questions to evaluate the iterations and choose our final design.

Which gesture is the most efficient?

Can the gestures be performed using one hand?

Which pose is the most ergonomic and requires the least effort?

Gesture Library

To design intuitive gestures, I led a study in which we observed and recorded how people complete tasks in real life.

I then worked with another researcher to analyze the results and identify common themes. We used these themes to create our final set of gestures.

Making the interaction more intuitive

Pull the handle on the pop-up menu

Use two hands to pull the handles on the object in opposite directions

Swipe the thumb along the index finger

Swipe the hand

Pinch an object

Pinch multiple objects

Swipe the index and middle fingers to the left

Grip hard

Grip hard

Improving the efficiency of the gestures

To make the gestures more efficient, I studied interactions in current interfaces and streamlined each gesture for XR.

As a result, the team and I developed the following gestures.

Inspired by the art direction of games such as “Control” and “Inside,” I used Blender to create a 3D-rendered experience for the game.

What I learned & the impact

The Metaverse still needs its infrastructure. Today's screen-based operating systems give users shared interaction conventions, and I hope our work will one day inspire people to design a similarly universal interaction system for all XR apps.

Future casting

- STEEPS MODEL

I used the STEEPS model to analyze current societal focus. I also predicted future developments based on news keywords related to XR, AR/VR, and the Metaverse.

An attractive way of storytelling

We aim to inspire XR designers to improve their interactions by letting them try our gesture library in an immersive game environment.

INSPIRATIONS

From the games “Control” and “Inside”

OUR RENDERING

In Blender

Goal of the game

Players must use a variety of interactions to solve puzzles and escape the rooms.

LEVEL 1 ROOM

(Intuitive Interaction)

LEVEL 2 FACTORY

(Efficient Interaction)

© Copyright 2024 • All Rights Reserved

hi@haoran.io